Phi-4: Microsoft’s Newest Small Language Model

Details on Phi-4: Microsoft’s Newest Small Language Model Specializing in Complex Reasoning

Created Dec 20, 2024 - Last updated: Dec 20, 2024

Microsoft’s Phi-4 is a 14-billion-parameter small language model that excels at complex reasoning while keeping a compact, efficient architecture. Rather than competing with its larger counterparts on sheer size, Phi-4 focuses on delivering precise, nuanced outputs for use cases that demand advanced problem-solving without overwhelming computational resources.

This post explores Phi-4’s architecture, benchmarks, and practical applications, along with step-by-step instructions for running it locally or deploying it on Azure AI Foundry. Whether you’re an AI enthusiast or a developer looking to put the model to work, this guide covers what you need to get started.

Why Phi-4?

Phi-4 bridges the gap between efficiency and intelligence. Its smaller size makes it:

- Resource-friendly: Suitable for deployment on hardware far more modest than large frontier models require.

- Highly specialized: Designed to excel in tasks requiring logical reasoning and contextual understanding.

- Versatile: Adaptable across various industries, from finance to healthcare and education.

Benchmarks reveal that Phi-4 outperforms models of similar sizes on tasks like:

- Logical deduction

- Multi-hop reasoning

- Contextual understanding

In benchmark comparisons against other models, Phi-4’s combination of strong accuracy and a memory footprint far smaller than that of much larger models makes it a standout choice.

Key Features of Phi-4

- Advanced Reasoning

Rather than relying on scale, Phi-4 was trained with a recipe centered on high-quality synthetic and curated data, which enables it to tackle:

- Complex logical chains.

- Multi-layered contextual problems.

- Compact Size

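With about 14 billion parameters, Phi-4 is small next to frontier models, though not tiny: its weights take roughly 28GB at 16-bit precision. Quantized builds shrink that enough to run on well-equipped local machines, usually at some cost in output quality.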

- Customizability

Fine-tuning Phi-4 for domain-specific tasks is straightforward, making it an ideal candidate for applications in:

- Financial forecasting.

- Legal document analysis.

- Academic research support.
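To put the Compact Size point in numbers: the memory needed for a model’s weights is roughly its parameter count times the bytes stored per parameter. The sketch below assumes Phi-4’s roughly 14 billion parameters (as Microsoft describes the model) and ignores runtime overhead such as the KV cache, so treat its results as lower bounds rather than exact requirements.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_param / 8  # 8 bits per byte
    return total_bytes / 1e9


# Assumption: Phi-4 has about 14 billion parameters, as Microsoft describes it.
PHI4_PARAMS = 14e9

if __name__ == "__main__":
    for label, bits in [("16-bit (bf16/fp16)", 16), ("8-bit", 8), ("4-bit", 4)]:
        # Weights only: the KV cache and runtime buffers need additional memory.
        print(f"{label:>18}: ~{weight_memory_gb(PHI4_PARAMS, bits):.0f} GB")
```

This works out to about 28, 14, and 7 GB for 16-, 8-, and 4-bit weights. Real quantized files are usually somewhat larger than the 4-bit figure, because quantization formats store scaling factors and often keep some tensors at higher precision.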

Getting Started with Phi-4

Running Phi-4 Locally

You can run Phi-4 on your own laptop or desktop with Ollama, an open-source tool for running language models locally. Here are the steps:

Step 1: System Requirements

- OS: Windows 10/11, macOS, or Linux

- RAM: Minimum 16GB (Ollama’s general guidance for models of this size); more is better

- Python: Version 3.8 or higher
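Before installing anything, a quick check against the requirements above can save time. This is a minimal sketch using only the Python standard library. The RAM lookup relies on POSIX `os.sysconf`, so it reports "unknown" on platforms that lack it (such as Windows), and the `ollama` check only passes once Step 2 is done. The helper names are illustrative.

```python
import os
import shutil
import sys
from typing import Optional, Tuple

MIN_PYTHON = (3, 8)  # from the requirements listed above


def python_ok(version: Optional[Tuple[int, ...]] = None,
              minimum: Tuple[int, ...] = MIN_PYTHON) -> bool:
    """True if the given (or currently running) Python version meets the minimum."""
    current = tuple(version) if version is not None else tuple(sys.version_info[:2])
    return current >= tuple(minimum)


def total_ram_gb() -> Optional[float]:
    """Physical RAM in decimal GB via POSIX sysconf; None where unsupported."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        # AttributeError: no os.sysconf (e.g. Windows); ValueError: name not supported
        return None


def ollama_on_path() -> bool:
    """True if an `ollama` executable can be found on PATH."""
    return shutil.which("ollama") is not None


if __name__ == "__main__":
    ram = total_ram_gb()
    print(f"Python {sys.version_info.major}.{sys.version_info.minor} meets 3.8+: {python_ok()}")
    print("Physical RAM:", "unknown on this platform" if ram is None else f"{ram:.1f} GB")
    print("ollama found on PATH:", ollama_on_path())
```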

Step 2: Ollama Installation

Installing Ollama is straightforward, and it supports macOS, Windows, and Linux, as well as Docker environments. Below are the installation steps for Windows.

You can obtain the installation package from the official website or GitHub:

Screenshot of Ollama Download Page

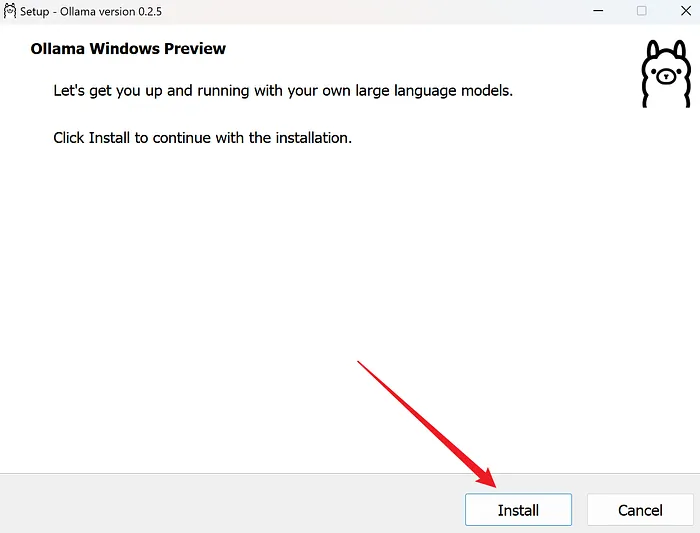

Install Ollama on Windows

Download the installer from the official Ollama website: https://ollama.com/download/OllamaSetup.exe

Run the installer and click Install.


The installer performs the installation automatically, so please be patient. When it finishes, the installer window closes on its own. Don’t worry if nothing else appears: Ollama is now running in the background, and its icon can be found in the system tray on the right side of the taskbar.

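Once a model has been downloaded (Steps 3 and 4 below), you can also access it programmatically through Ollama’s local REST API, which listens on http://localhost:11434 by default, or through a Python client.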
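As a minimal sketch of the REST route, the following uses only the standard library to call Ollama’s `/api/generate` endpoint with streaming turned off, so the whole reply arrives as a single JSON object whose `response` field holds the text. It assumes Ollama is running on its default port and that the `vanilj/Phi-4` model from Step 3 has been pulled; `build_generate_request` and `generate` are illustrative helper names, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address
MODEL = "vanilj/Phi-4"                 # model tag used in this guide


def build_generate_request(prompt: str, model: str = MODEL,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint with streaming off."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=300) as resp:
        body = json.load(resp)
    return body["response"]  # non-streaming replies carry the full text here


if __name__ == "__main__":
    print(generate("Explain, step by step, why the sum of two odd numbers is even."))
```

Building the request in its own function keeps the payload easy to inspect without a running server.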

Step 3: Download the Phi-4 Model

Once Ollama is installed, download the Phi-4 model. This guide uses vanilj/Phi-4, a community upload on the Ollama registry (the part before the slash is the publisher’s namespace). Run:

```shell
ollama pull vanilj/Phi-4
```
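To confirm the download from a script, you can query Ollama’s `/api/tags` endpoint, which lists the models available locally. This sketch assumes a running local server. `has_model` is a hypothetical helper that treats an untagged name as `:latest` (the tag Ollama applies by default) and compares names case-insensitively, which is a lenient assumption on our part.

```python
import json
import urllib.request
from typing import Iterable, List


def list_local_models(base_url: str = "http://localhost:11434") -> List[str]:
    """Return the model names reported by a running Ollama server's /api/tags endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return [m["name"] for m in json.load(resp).get("models", [])]


def _normalize(name: str) -> str:
    """Lower-case the name and append ':latest' when no tag is present."""
    name = name.lower()  # lenient case-insensitive match (our assumption)
    last_part = name.rsplit("/", 1)[-1]  # ignore any registry host:port prefix
    return name if ":" in last_part else name + ":latest"


def has_model(names: Iterable[str], wanted: str) -> bool:
    """True if `wanted` matches one of `names` after normalization."""
    target = _normalize(wanted)
    return any(_normalize(n) == target for n in names)


if __name__ == "__main__":
    print("vanilj/Phi-4 downloaded:", has_model(list_local_models(), "vanilj/Phi-4"))
```

Running `ollama list` in a terminal shows the same information interactively.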

Step 4: Run the Model

After downloading the model, you can run it using the following command:

```shell
ollama run vanilj/Phi-4
```

This starts the model and opens an interactive prompt in your terminal, where you can type questions directly; enter /bye to end the session.
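If you only need a single answer rather than an interactive session, `ollama run` also accepts the prompt as an argument, prints the reply, and exits. The sketch below wraps that in Python’s `subprocess` module. It assumes `ollama` is on your PATH and the model from Step 3 is downloaded; the helper names are illustrative.

```python
import shutil
import subprocess
from typing import List

MODEL = "vanilj/Phi-4"  # the model pulled in Step 3


def ollama_run_argv(prompt: str, model: str = MODEL) -> List[str]:
    """Argument list for a one-shot `ollama run <model> <prompt>` call."""
    return ["ollama", "run", model, prompt]


def run_once(prompt: str, model: str = MODEL) -> str:
    """Run a single prompt through the Ollama CLI and return its printed reply."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama is not on PATH; install it first (see Step 2)")
    result = subprocess.run(
        ollama_run_argv(prompt, model),
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,
        encoding="utf-8",
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout.strip()


if __name__ == "__main__":
    print(run_once("If all bloops are razzies and all razzies are lazzies, "
                   "are all bloops lazzies?"))
```

Passing the arguments as a list, rather than one shell string, avoids quoting problems with prompts that contain spaces or quotes.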

Step 5: Using the Model

You can now use the model for various tasks, such as generating text from a prompt, either in the interactive session or programmatically through Ollama’s REST API.
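For longer answers, streaming lets you print text as it is generated. With streaming enabled (the default for `/api/generate`), Ollama sends one JSON object per line, each carrying a `response` fragment, until an object with `"done": true` arrives. This sketch parses that stream with the standard library; it assumes the default local address and the `vanilj/Phi-4` model, and the function names are illustrative.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines, if any
        chunk = json.loads(raw)
        if "error" in chunk:
            raise RuntimeError(chunk["error"])
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of generation


def stream_generate(prompt: str, model: str = "vanilj/Phi-4",
                    base_url: str = "http://localhost:11434") -> Iterator[str]:
    """POST to /api/generate (streaming is on by default) and yield text as it arrives."""
    payload = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(f"{base_url}/api/generate", data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        yield from iter_stream_text(resp)  # iterating the response yields lines


if __name__ == "__main__":
    for piece in stream_generate("Write a haiku about small language models."):
        print(piece, end="", flush=True)
    print()
```

Separating the parser from the network call means it can be checked against a few hand-written lines without a running server.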

Visualizing Phi-4’s Impact

Figure: Phi-4 performance on math competition problems. Phi-4 outperforms much larger models, including Gemini Pro 1.5, on math competition problems (https://maa.org/student-programs/amc/).

For more benchmarks, see the Phi-4 technical report on arXiv.

Deploying Phi-4 on Azure AI Foundry

Azure AI Foundry offers integrated tooling for deploying Phi-4 in production environments.

[Azure AI Foundry](https://aka.ms/phi3-azure-ai)
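Once Phi-4 is deployed in Azure AI Foundry, you call it over HTTPS. The sketch below rests on loud assumptions: the endpoint URL, the `/chat/completions` path, and the `Authorization: Bearer` header are placeholders modeled on the OpenAI-style chat-completions format, so copy the exact endpoint, route, and authentication scheme from your deployment’s page in Azure AI Foundry before using it. `ENDPOINT`, `API_KEY`, `build_chat_request`, and `ask` are hypothetical names.

```python
import json
import urllib.request

# Assumptions: replace with the values shown for your deployment in Azure AI Foundry.
ENDPOINT = "https://<your-deployment>.<region>.models.ai.azure.com"  # hypothetical placeholder
API_KEY = "<your-api-key>"                                           # hypothetical placeholder


def build_chat_request(question: str, endpoint: str = ENDPOINT, api_key: str = API_KEY,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build a chat-completions request in the OpenAI-style messages format.
    The path and auth header are assumptions to verify against your deployment."""
    payload = {
        "messages": [
            {"role": "system", "content": "You are a careful assistant. Reason step by step."},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
        "temperature": 0.2,  # low temperature for more deterministic reasoning output
    }
    return urllib.request.Request(
        endpoint.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def ask(question: str) -> str:
    """Send the question and return the first choice's message text."""
    with urllib.request.urlopen(build_chat_request(question), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask("A train leaves at 9:40 and arrives at 11:15. How long is the trip?"))
```

Keeping request construction separate from the network call makes it easy to inspect the payload before spending tokens.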

Real-World Applications

- Education

Phi-4 enables personalized tutoring by solving complex problems in STEM subjects.

- Healthcare

Supports medical professionals in analyzing patient data and generating insights.

- Legal Analysis

Helps lawyers draft contracts and analyze legal documents with precise reasoning.

- Finance

Enhances financial modeling and risk analysis, supporting more accurate predictions.

Enabling AI innovation safely and responsibly

Building AI solutions responsibly is at the core of AI development at Microsoft. We have made our robust responsible AI capabilities available to customers building with Phi models, including Phi-3.5-mini optimized for Windows Copilot+ PCs.

Azure AI Foundry provides users with a robust set of capabilities to help organizations measure, mitigate, and manage AI risks across the AI development lifecycle for traditional machine learning and generative AI applications. Azure AI evaluations in AI Foundry enable developers to iteratively assess the quality and safety of models and applications using built-in and custom metrics to inform mitigations.

Additionally, Phi users can use Azure AI Content Safety features such as prompt shields, protected material detection, and groundedness detection. These capabilities can be leveraged as content filters with any language model included in our model catalog, and developers can integrate them into their applications easily through a single API. Once in production, developers can monitor their application for quality and safety, adversarial prompt attacks, and data integrity, making timely interventions with the help of real-time alerts.

Conclusion

Phi-4 is a testament to Microsoft’s commitment to advancing AI capabilities while ensuring accessibility and efficiency. With its compact architecture and robust reasoning abilities, Phi-4 is set to redefine how small language models are utilized across industries.

Whether you’re running it locally or scaling it on Azure AI Foundry, Phi-4 offers a strong balance of flexibility and performance. Try it today and experience the future of AI-driven reasoning firsthand.